The following sample demonstrates how to compute a histogram on a GPU. CUDA SDK has a histogram sample which works for 64 and 256 bins with code both running on GPU and CPU. SDK CPU code is using bit-manipulation which should be replaced with SSE2 instructions. This article demonstrates 3 simple, entry-level, examples showing the basics of the algorithms allowing you to learn and take it to the next level.

Running times are below, we’ll discuss differences between 3 kernels later.

Data size: 38.147MB (10000000) Serial on CPU in: 18017us (18ms) Copied data to the GPU: 141026us (141ms) histogram 1: 8522.05us (8.52205ms) histogram 2: 8926.75us (8.92675ms) histogram 3: 2072.93us (2.07293ms) Copied matrix to the CPU: 0us (0ms) Kernel on GPU in: 174997us (174ms) Done... Resetting device!

Solution consists of a helper hst class and CUDA hst.cu file containing kernels. Program.cpp has a main function to launch the app.

Hst class looks like this

Entry function into histogram calculations is shown below. Here we create data array and populate with random values, and two arrays to hold histogram values from CPU and GPU calculations.

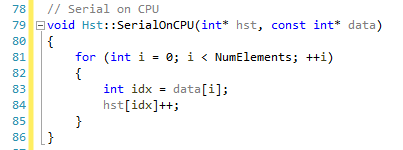

Our CPU calculation is trivial. Random data that we have created does not exceed range of bins and so we are simply computing how many unique values are in our sample.

The function responsible for calling kernels is below. In it, we create two device vectors, one for holding data and another for the histogram, and then invoke 3 kernels one at a time, copy histogram back to the CPU and exit.

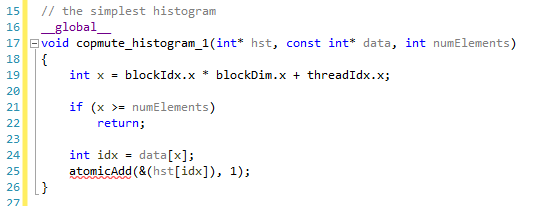

The first kernel looks just like parallel code on CPU. Here we launch as many threads as there are data values, where each thread is responsible for updating global count. Just like on the CPU, to avoid write collisions, writes must be atomic creating highly inefficient code: many threads are trying to update small number of memory locations.

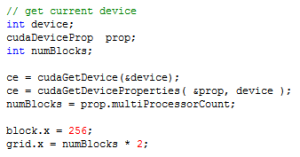

Performance of the second kernel is not much better than of the first one. However, we take a different approach in it by adding a stride factor where each thread can do a little more work than in the first kernel. To launch the kernel, we find out number of multiprocessors on the active GPU and multiply it by 2.

The code is still very inefficient due to write collisions.

The last kernel is using shared memory but only works on a small number of histogram bins where the number of bins cannot exceed the number of threads in the block – in fact they have to be the same. That limits this kernel

to small histograms with maybe 256 bins (could be a little more). Here is where you would want to look at the CUDA SDK kernels that are optimized to work with 256 and 64 bins. Nevertheless, it significantly improves on the previous two kernels. We use the same number of blocks as in kernel 2, but number of threads per block must be the same as number of bins.