This article and code examples are based on chapter 5 of C++ Concurrency in Action book by Anthony Williams. Chapter 4 is described here. You can download the code here.

We will look at low-level details of the C++11 memory model and atomic operations that provide the basis for synchronization between threads through specializations of the std::atomic<> class template, operations on atomic types, and complexity of memory ordering. Also covered are fences and their pairing with operations on atomic types to enforce memory ordering. Finally, we will look at atomic operations to enforce memory ordering between non-atomic operations on separate threads.

C++ memory model makes minimal guarantees about semantics of memory access allowing for compiler optimizations that rearrange code and for hardware scheduler that executes instructions out of order.

All data variables in a program are stored in memory and have to be brought to a CPU for a program to operate on it. Computer memory hierarchy is not flat though and data is cached in local CPU caches to speed up performance (see references below). There is no problem when different threads operate on different memory locations, but the problems start arising when these threads write into the same memory that other threads read from. To ensure data consistency, ordered access to the memory location between threads that try to read and modify a variable must be enforced. In C++ there are helper structures like mutexes that can help with that. You can also use low level atomic operations to enforce ordering between threads.

I’ll refer further details to the book and to your own research.

Solution has 13 projects shown here.

Spinlock mutex using std::atomic_flag

std::atomic_flag is a Boolean flag and can be in one of two states: set or clear. It is deliberately simple and is only intended as a building block; it should never be used except under very special circumstances. If used, it can be combined with std::lock_guard<>. By its very nature it does a busy-wait in lock(), so it’s a poor choice if you expect any degree of contention, but it’s enough to ensure mutual exclusion.

Reading and writing variables from different threads using atomic_bool

The most basic of the atomic integral types is std::atomic<bool>. Although it’s still not copy-constructible or copy-assignable, you can construct it from a nonatomic bool, so it can be initially true or false, and you can also assign to instances of std::atomic<bool> from a nonatomic bool.

Operation ordering guarantees

The order in which you write your code is not the same in which it will be executed. Both compiler and then hardware will optimize your code and find efficiencies.

The happens-before relationship is the basic building block of operation ordering in a program; it specifies which operations see the effects of which other operations. For a single thread, it’s largely straightforward: if one operation is sequenced before another, then it also happens-before it. This means that if one operation (A) occurs in a statement prior to another (B) in the source code, then A happens-before B.

If the operations occur in the same statement, in general there’s no happens-before relationship between them, because they’re unordered. This is just another way of saying that the ordering is unspecified. You know that the program in the following listing will output “1,2” or “2,1”, but it’s unspecified which, because the order of the two calls to get_num() is unspecified.

Sequentially consistent ordering

The default ordering is named sequentially consistent because it implies that the behavior of the program is consistent with a simple sequential view of the world. If all operations on instances of atomic types are sequentially consistent, the behavior of a multithreaded program is as if all these operations were performed in some particular sequence by a single thread. This is by far the easiest memory ordering to understand, which is why it’s the default: all threads must see the same order of operations. This makes it easy to reason about the behavior of code written with atomic variables. You can write down all the possible sequences of operations by different threads, eliminate those that are inconsistent, and verify that your code behaves as expected in the others. It also means that operations can’t be reordered; if your code has one operation before another in one thread, that ordering must be seen by all other threads.

The following listing shows sequential consistency in action. The loads and stores to x and y are explicitly tagged with memory_order_seq_cst, although this tag could be omitted in this case because it’s the default.

Relaxed ordering

Operations on atomic types performed with relaxed ordering don’t participate in synchronizes-with relationships. Operations on the same variable within a single thread still obey happens-before relationships, but there’s almost no requirement on ordering relative to other threads. The only requirement is that accesses to a single atomic variable from the same thread can’t be reordered; once a given thread has seen a particular value of an atomic variable, a subsequent read by that thread can’t retrieve an earlier value of the variable. Without any additional synchronization, the modification order of each variable is the only thing shared between threads that are using memory_order_relaxed.

To demonstrate just how relaxed your relaxed operations can be, you need only two threads, as shown in the following listing.

This time the assert can fire, because the load of x can read false, even though the load of y reads true and the store of x happens before the store of y. x and y are different variables, so there are no ordering guarantees relating to the visibility of values arising from operations on each.

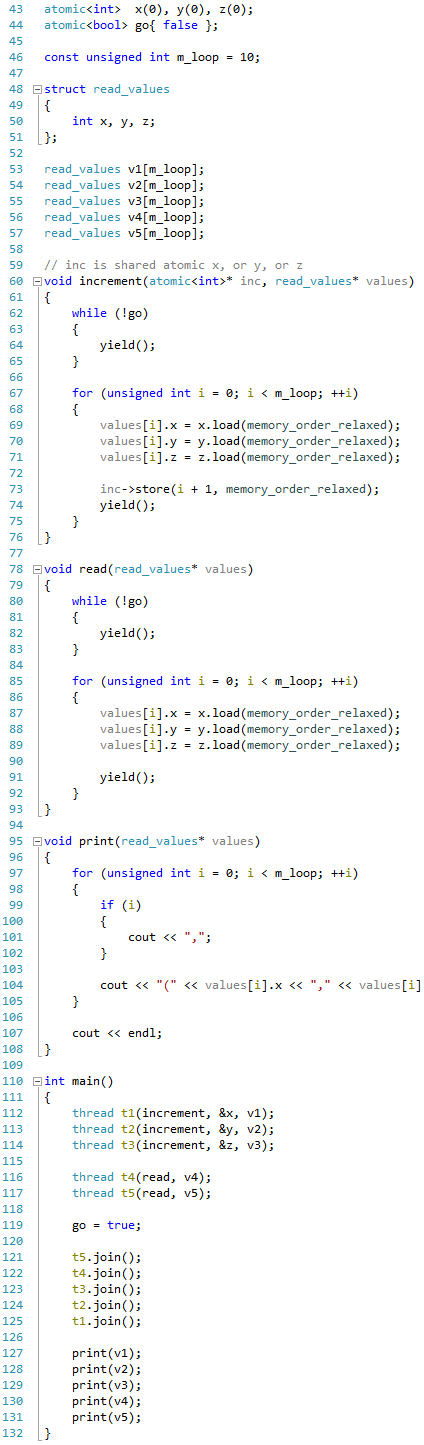

More complex example of relaxed ordering

This program has three shared global atomic variables x, y, z and five threads. Each thread loops 10 times, reading the values of the three atomic variables using memory_order_relaxed and storing them in an array. Three of the threads each update one of the atomic variables every time through the loop, while the other two threads just read. Once all the threads have been joined, values from the arrays stored by each thread are printed. The atomic variable go is used to ensure that the threads all start the loop as near to the same time as possible. Launching a thread is an expensive operation, and without the explicit delay, the first thread may be finished before the last one has started. Each thread waits for go to become true before entering the main loop, and go is set to true only once all the threads have started.

Possible output:

(0,0,0),(1,2,2),(2,2,2),(3,2,2),(4,3,3),(5,3,3),(6,3,3),(7,3,3),(8,3,3),(9,3,3)

(1,0,0),(1,1,1),(3,2,2),(10,3,3),(10,4,4),(10,5,10),(10,6,10),(10,7,10),(10,8,10),(10,9,10)

(1,1,0),(1,2,1),(4,3,2),(10,4,3),(10,5,4),(10,5,5),(10,5,6),(10,5,7),(10,5,8),(10,5,9)

(1,0,0),(2,2,2),(3,2,2),(4,2,2),(5,3,3),(6,3,3),(6,3,3),(7,3,3),(8,3,3),(9,3,3)

(1,2,2),(2,2,2),(3,2,2),(4,3,3),(5,3,3),(6,3,3),(7,3,3),(8,3,3),(9,3,3),(10,3,3)

The first three lines are the threads doing the updating, and the last two are the threads doing just reading. Each triplet is a set of the variables x, y and z in that order from one pass through the loop. There are a few things to notice from this output:

1. The first set of values shows x increasing by one with each triplet, the second set has y increasing by one, and the third has z increasing by one.

2. The x elements of each triplet only increase within a given set, as do the y and z elements, but the increments are uneven, and the relative orderings vary between all threads.

Acquire-release ordering

Acquire-release ordering is a step up from relaxed ordering; there’s still no total order of operations, but it does introduce some synchronization. Under this ordering model, atomic loads are acquire operations (memory_order_acquire), atomic stores are release operations (memory_order_release), and atomic read-modify-write operations (such as fetch_add() or exchange()) are either acquire, release, or both (memory_order_acq_rel). Synchronization is pairwise, between the thread that does the release and the thread that does the acquire. A release operation synchronizes-with an acquire operation that reads the value written. This means that different threads can still see different orderings, but these orderings are restricted.

Imposing ordering on relaxed operations

If you change the store to y to use memory_order_release and the load from y to use memory_order_acquire like in the following listing, then you actually impose an ordering on the operations on x. Eventually, the load from y will see true as written by the store. Because the store uses memory_order_release and the load uses memory_order_acquire, the store synchronizes with the load. The store to x happens-before the store to y, because they’re in the same thread. Because the store to y synchronizes-with the load from y, the store to x also happens-before the load from y and by extension happens before the load from x. Thus the load from x must read true, and the assert can’t fire. If the load from y wasn’t in a while loop, this wouldn’t necessarily be the case; the load from y might read false, in which case there’d be no requirement on the value read from x.

In order to provide any synchronization, acquire and release operations must be paired up. The value stored by a release operation must be seen by an acquire operation for either to have any effect. If either the store or the load was a relaxed operation, there’d be no ordering on the accesses to x, so there’d be no guarantee that the load would read true, and the assert could fire.

Transitive synchronization

In mathematics, a binary relation R over a set X is transitive if whenever an element a is related to an element b, and b is in turn related to an element c, then a is also related to c. Transitivity is a key property of both partial order relations and equivalence relations.

In order to think about transitive ordering, you need at least three threads. The first thread modifies some shared variables and does a store-release to one of them. A second thread then reads the variable subject to the store-release with a load-acquire and performs a store-release on a second shared variable. Finally, a third thread does a load-acquire on that second shared variable. Provided that the load-acquire operations see the values written by the store-release operations to ensure the synchronizes-with relationships, this third thread can read the values of the other variables stored by the first thread, even if the intermediate thread didn’t touch any of them.

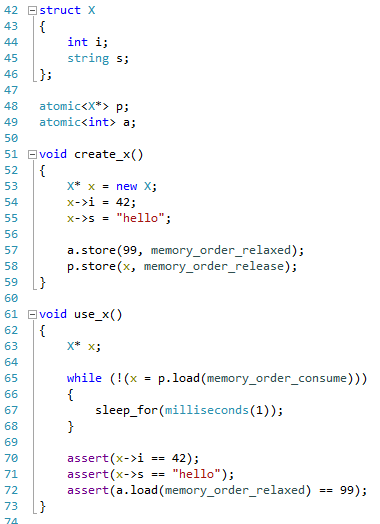

Synchronizing with std::memory_order_consume

The dependency-ordered-before relationship can apply between threads. It’s introduced by using atomic load operations tagged with memory_order_consume. This is a special case of memory_order_acquire that limits the synchronized data to direct dependencies; a store operation A tagged with memory_order_release, memory_order_acq_rel, or memory_order_seq_cst is dependency-ordered-before a load operation B tagged with memory_order_consume if the consume reads the value stored. This is as opposed to synchronizes-with relationship if the load uses memory_order_acquire. If this operation B then carries-a-dependency-to some operation C, then A is also dependency-ordered-before C.

This wouldn’t actually do you any good for synchronization purposes if it didn’t affect the inter-thread happens-before relation, but it does: if A is dependency-ordered-before B, then A also inter-thread happens-before B.

One important use for this kind of memory ordering is where the atomic operation loads a pointer to some data. By using memory_order_consume on the load and memory_order_release on the prior store, you ensure that the pointed-to data is correctly synchronized, without imposing any synchronization requirements on any other nondependent data. This means that the store to p only happens-before those expressions that are dependent on the value loaded from p and the asserts on the data members of the X structure are guaranteed not to fire because the load of p carries a dependency to those expressions through the variable x. On the other hand, assert on the value of a may or may not fire; this operation isn’t dependent on the value loaded from p, and so there’s no guarantee on the value that’s read. This is particularly apparent because it’s tagged with memory_order_relaxed.

Reading values from a queue with atomic operations

One way to handle things would be for a thread producing data to store the items in a shared buffer and then do count.store(number_of_items, memory_order_release) which will allow other threads to know that the data is available. Threads consuming the queue items might then do count.fetch_sub(1, memory_order_acquire) to claim an item from the queue, prior to actually reading the shared buffer. Once the count becomes zero, there are no more items, and the thread must wait.

Using fences to order relaxed operations

Fences are operations that enforce memory-ordering constraints without modifying any data and are typically combined with atomic operations that use the memory_order_relaxed ordering constraints. Fences are global operations and affect the ordering of other atomic operations in the thread that executed the fence. Fences are also commonly called memory barriers, and they get their name because they put a line in the code that certain operations can’t cross. Relaxed operations on separate variables can usually be freely reordered by the compiler or the hardware. Fences restrict this freedom and introduce happens-before and synchronizes-with relationships that weren’t present before.

Note that both fences in the code below are necessary: you need a release in one thread and acquire in another to get a synchronizes-with relationship.

Using atomics to order operations on non-atomic data

Fences prevent compiler or hardware from reordering non-atomic operations.

References

|

1. |

atomic Weapons: The C++ Memory Model and Modern Hardware; Herb Sutter |

|

2. |

C++ Concurrency in Action on Amazon |

|

3. |

|

|

4. |

|

|

5. |

Memory Models; Princeton |

|

6. |

Foundations of the C++ Concurrency Memory Model Hans-J. Boehm, HP Laboratories Sarita V. Adve, University of Illinois at Urbana-Champaign |

|

7. |

C++ Memory Model: Motivation and Explanation of the Model Martin Kempf University of Applied Sciences Rapperswil (HSR), Switzerland 8/1/2013 Master Seminar: Program Analysis and Transformation http://wiki.ifs.hsr.ch/SemProgAnTr/files/cppMemoryModel_Presentation_08_01_13.pdf |

|

8. |

Memory model |

|

9. |

|

|

10. |

Multicore Programming: C++0x Mark Batty, University of Cambridge in collaboration with Scott Owens, Susmit Sarkar, Peter Sewell, Tjark Weber November, 2010 |

|

11. |

Happened-before |

|

12. |

Weak ordering |

|

13. |

Transitive relation |